Truth: I think human language is a frakin' awesome system.

(You can hear me talk more about it in an excited fashion here, here, and here.)

I'm fascinated by the question of how to figure out the underlying systems in a language based on the observable data. Because it's hard.

Really, really hard.

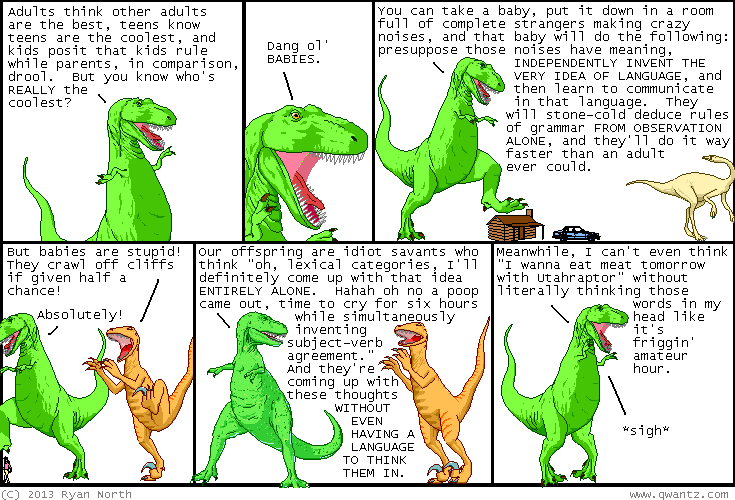

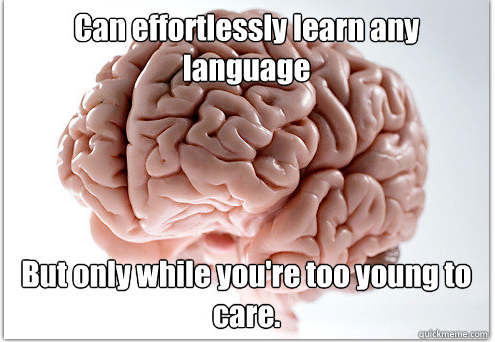

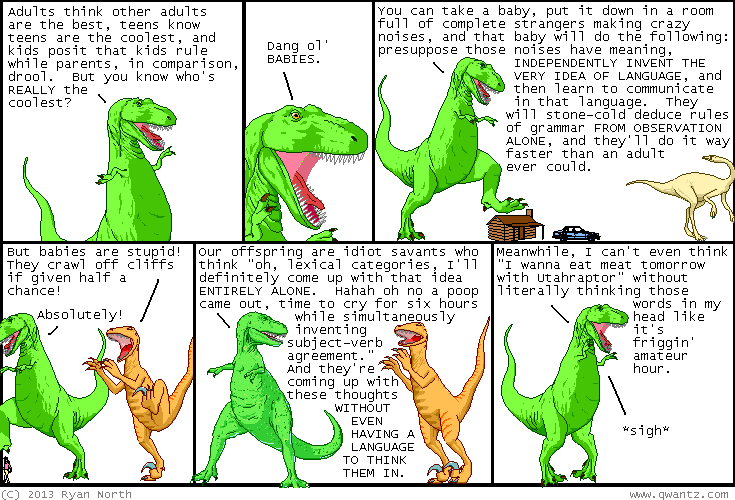

Why? Because lots of the system components interact, and the data are often noisy. Yet children need only a few years to figure out all the complexity of their native language (you can carry on quite a reasonable conversation with a five-year-old), and they do this despite being significantly less cognitively capable than adults.

http://www.quickmeme.com/meme/36f39x/

http://www.qwantz.com/index.php?comic=2479

How does this process work, especially in an immature mind with cognitive limitations? What information needs to already be available, and what needs to be learned from the observable data? How could someone (or something, if we're thinking of automated systems that may benefit from the insights from human learning) figure out the complexity of a system like this?

The majority of my work uses computational modeling as the tool of exploration, with as much empirical grounding in available data as I can muster. This translates to a variety of interests, including:

- computational models of human language learning (especially ones incorporating discrete representations with probabilistic learning methods like Bayesian inference)

- sophisticated quantitative analysis of information in children's input and output

- more applied computational work that tries to extract non-linguistic information about the world from its linguistic signature in the available input
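
To give a flavor of what "discrete representations with probabilistic learning" can look like, here is a minimal sketch of Bayesian inference over a small set of discrete hypotheses. Everything in it is a made-up toy for illustration, not a model from my work: the two candidate grammars (`VO` and `OV`), the assumed 10% noise rate, the four-item data set, and the helper name `posterior` are all hypothetical.

```python
from math import exp, log


def posterior(prior, likelihood, data):
    """Return P(hypothesis | data) over a discrete hypothesis space."""
    # Score each hypothesis in log space so long data sequences do not underflow.
    log_scores = {
        h: log(p) + sum(log(likelihood[h][d]) for d in data)
        for h, p in prior.items()
    }
    # Subtract the best score before exponentiating, then normalize.
    top = max(log_scores.values())
    unnorm = {h: exp(s - top) for h, s in log_scores.items()}
    total = sum(unnorm.values())
    return {h: u / total for h, u in unnorm.items()}


# Toy question (hypothetical): is the language verb-object or object-verb?
prior = {"VO": 0.5, "OV": 0.5}

# Assumed noise: each grammar yields the "wrong" order 10% of the time.
likelihood = {
    "VO": {"vo": 0.9, "ov": 0.1},
    "OV": {"vo": 0.1, "ov": 0.9},
}

# Noisy observations: three verb-object utterances and one object-verb.
data = ["vo", "vo", "ov", "vo"]

post = posterior(prior, likelihood, data)
print(post)
```

Even with one conflicting data point, the learner in this toy setup ends up strongly favoring `VO`. The hypothesis space stays discrete while the learner's confidence in each hypothesis is graded, which is the combination the first bullet describes.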